This is How to Build Haskell with GNU Make (and why it's worth trying)

In a previous article I showed the GHC commands you need to compile a basic Haskell executable without explicitly using the source files from its dependencies. But when you're writing your own Haskell code, 99% of the time you want to be using a Haskell build system like Stack or Cabal for your compilation needs instead of writing your own GHC commands. (And you can learn how to use Stack in my new free course, Setup.hs).

But part of my motivation for solving that problem was that I wanted to try an interesting experiment:

How can I build my Haskell code using GNU Make?

GNU Make is a generic build system that allows you to specify components of your project, map out their dependencies, and dictate how your build artifacts are generated and run.

I wanted to structure my source code the same way I would in a Cabal-style application, but rely on GNU Make to chain together the necessary GHC compilation commands. I did this to help gain a deeper understanding of how a Haskell build system could work under the hood.

In a Haskell project, we map out our project structure in the .cabal file. When we use GNU Make, our project is mapped out in the makefile. Here's the Makefile we'll ultimately be constructing:

GHC = ~/.ghcup/ghc/9.2.5/bin/ghc
BIN = ./bin
EXE = ${BIN}/hello

LIB_DIR = ${BIN}/lib
SRCS = $(wildcard src/*.hs)
LIB_OBJS = $(wildcard ${LIB_DIR}/*.o)

library: ${SRCS}
	@mkdir -p ${LIB_DIR}
	@${GHC} ${SRCS} -hidir ${LIB_DIR} -odir ${LIB_DIR}

generate_run: app/Main.hs library
	@mkdir -p ${BIN}
	@cp ${LIB_DIR}/*.hi ${BIN}
	@${GHC} -i${BIN} -c app/Main.hs -hidir ${BIN} -odir ${BIN}
	@${GHC} ${BIN}/Main.o ${LIB_OBJS} -o ${EXE}

run: generate_run
	@${EXE}

TEST_DIR = ${BIN}/test
TEST_EXE = ${TEST_DIR}/run_test

generate_test: test/Spec.hs library
	@mkdir -p ${TEST_DIR}
	@cp ${LIB_DIR}/*.hi ${TEST_DIR}
	@${GHC} -i${TEST_DIR} -c test/Spec.hs -hidir ${TEST_DIR} -odir ${TEST_DIR}
	@${GHC} ${TEST_DIR}/Main.o ${LIB_OBJS} -o ${TEST_EXE}

test: generate_test
	@${TEST_EXE}

clean:
	rm -rf ./bin

Over the course of this article, we'll build up this solution piece-by-piece. But first, let's understand exactly what Haskell code we're trying to build.

Our Source Code

We want to lay out our files like this, separating our source code (/src directory), from our executable code (/app) and our testing code (/test):

.
├── app
│   └── Main.hs
├── makefile
├── src
│   ├── MyFunction.hs
│   └── MyStrings.hs
└── test
    └── Spec.hs

Here's the source code for our three primary files:

-- src/MyStrings.hs
module MyStrings where

greeting :: String
greeting = "Hello"

-- src/MyFunction.hs
module MyFunction where

modifyString :: String -> String
modifyString x = base <> " " <> base
  where
    base = tail x <> [head x]

-- app/Main.hs
module Main where

import MyStrings (greeting)
import MyFunction (modifyString)

main :: IO ()
main = putStrLn (modifyString greeting)

And here's what our simple "Spec" test looks like. It doesn't use a testing library; it just prints different messages depending on whether or not we get the expected output from modifyString.

-- test/Spec.hs
module Main where

import MyFunction (modifyString)

main :: IO ()
main = do
  test "abcd" "bcda bcda"
  test "Hello" "elloH elloH"

test :: String -> String -> IO ()
test input expected = do
  let actual = modifyString input
  putStr $ "Testing case: " <> input <> ": "
  if expected /= actual
    then putStrLn $ "Incorrect result! Expected: " <> expected <> " Actual: " <> actual
    else putStrLn "Correct!"

The files are laid out the way we would expect for a basic Haskell application. We have our "library" code in the src directory. We have a single "executable" in the app directory. And we have a single "test suite" in the test directory. Instead of having a Project.cabal file at the root of our project, we'll have our makefile. (At the end, we'll actually compare our Makefile with an equivalent .cabal file).

But what does the Makefile look like? Well it would be overwhelming to construct it all at once. Let's begin slowly by treating our executable as a single file application.

Running a Single File Application

So for now, let's adjust Main.hs so it's an independent file without any dependencies on our library modules:

-- app/Main.hs
module Main where

main :: IO ()
main = putStrLn "Hello"

The simplest way to run this file is runghc. So let's create our first makefile rule that will do this. A rule has a name, a set of prerequisites, and then a set of commands to run. We'll call our rule run, and have it use runghc on app/Main.hs. We'll also include the app/Main.hs as a prerequisite, since the rule will run differently if that file changes.

run: app/Main.hs
	runghc app/Main.hs

And now we can run this rule using make run, and it will work!

$ make run
runghc app/Main.hs
Hello

Notice that it prints the command we're running. We can change this by using the @ symbol in front of the command in our Makefile. We'll do this with almost all our commands:

run: app/Main.hs
	@runghc app/Main.hs

And it now runs our application without printing the command.
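It helps to know how Make uses those prerequisites: it compares file timestamps and reruns a rule's commands when the target file is missing or older than one of its prerequisites. Here is a minimal Haskell sketch of that check. It is a simplified model, not Make's actual implementation, and the name needsRebuild is made up for illustration.

```haskell
module Main where

import System.Directory (doesFileExist, getModificationTime)

-- Simplified model of Make's staleness check (an assumption-laden sketch):
-- a target is stale if it does not exist as a file, or if any
-- prerequisite was modified more recently than the target.
-- Note: if the target exists but a prerequisite is missing, this throws.
needsRebuild :: FilePath -> [FilePath] -> IO Bool
needsRebuild target prereqs = do
  targetExists <- doesFileExist target
  if not targetExists
    then pure True
    else do
      targetTime <- getModificationTime target
      prereqTimes <- mapM getModificationTime prereqs
      pure (any (> targetTime) prereqTimes)

main :: IO ()
main = do
  -- Mirrors the rule "run: app/Main.hs" from the makefile above.
  stale <- needsRebuild "run" ["app/Main.hs"]
  putStrLn ("run needs rebuilding: " <> show stale)
```

One consequence for our makefile: our commands never create a file named run, so the target is always "missing" and the rule's commands execute every time we call make run.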

Using runghc is convenient, but if we want to use dependencies from different directories, we'll eventually need to use multiple stages of compilation. So we'll want to create two distinct rules. One that generates the executable using ghc, and another that actually runs the generated executable.

So let's create a generate_run rule that will produce the build artifacts, and then run will use them.

generate_run: app/Main.hs
	@ghc app/Main.hs

run: generate_run
	@./app/Main

Notice that run can depend on generate_run as a prerequisite, instead of the source file now. This also generates three build artifacts directly in our app directory: the interface file Main.hi, the object file Main.o, and the executable Main.

It's bad practice to mix build artifacts with source files, so let's use GHC's arguments (-hidir, -odir and -o) to store these artifacts in their own directory called bin.

generate_run: app/Main.hs
	@mkdir -p ./bin
	@ghc app/Main.hs -hidir ./bin -odir ./bin -o ./bin/hello

run: generate_run
	@./bin/hello

We can then add a third rule to "clean" our project. This would remove all binary files so that we can do a fresh recompilation if we want.

clean:
	rm -rf ./bin

For one final flourish in this section, we can use some variables. We can make one for the GHC compiler, referencing its absolute path instead of a symlink. This would make it easy to switch out the version if we wanted. We'll also add a variable for our bin directory and the hello executable, since these are used multiple times.

# Could easily switch versions if desired
# e.g. GHC = ~/.ghcup/ghc/9.4.4/bin/ghc
GHC = ~/.ghcup/ghc/9.2.5/bin/ghc
BIN = ./bin
EXE = ${BIN}/hello

generate_run: app/Main.hs
	@mkdir -p ${BIN}
	@${GHC} app/Main.hs -hidir ${BIN} -odir ${BIN} -o ${EXE}

run: generate_run
	@${EXE}

clean:
	rm -rf ./bin

And all this still works as expected!

$ make generate_run
[1 of 1] Compiling Main (app/Main.hs, bin/Main.o)
Linking ./bin/hello
$ make run
Hello
$ make clean
rm -rf ./bin

So we have some basic rules for our executable. But remember our goal is to depend on a library. So let's add a new rule to generate the library objects.

Generating a Library

For this step, we would like to compile src/MyStrings.hs and src/MyFunction.hs. Each of these will generate an interface file (.hi) and an object file (.o). We want to place these artifacts in a specific library directory within our bin folder.

We'll do this by means of a new rule, library, which will use our two source files as its prerequisites. It will start by creating the library artifacts directory:

LIB_DIR = ${BIN}/lib

library: src/MyStrings.hs src/MyFunction.hs
	@mkdir -p ${LIB_DIR}
	...

But now the only thing we have to do is use GHC on both of our source files, using LIB_DIR as the destination point.

LIB_DIR = ${BIN}/lib

library: src/MyStrings.hs src/MyFunction.hs
	@mkdir -p ${LIB_DIR}
	@ghc src/MyStrings.hs src/MyFunction.hs -hidir ${LIB_DIR} -odir ${LIB_DIR}

Now when we run the target, we'll see that it produces the desired files:

$ make library
$ ls ./bin/lib
MyFunction.hi MyFunction.o MyStrings.hi MyStrings.o

Right now though, if we added a new source file, we'd have to modify the rule in two places. We can fix this by adding a variable that uses wildcard to match all our source files in the directory (src/*.hs).

LIB_DIR = ${BIN}/lib
SRCS = $(wildcard src/*.hs)

library: ${SRCS}
	@mkdir -p ${LIB_DIR}
	@${GHC} ${SRCS} -hidir ${LIB_DIR} -odir ${LIB_DIR}

While we're learning about wildcard, let's make another variable to capture all the produced object files. We'll use this in the next section.

LIB_OBJS = $(wildcard ${LIB_DIR}/*.o)

So great! We're producing our library artifacts. How do we use them?

Linking the Library

In this section, we'll link our library code with our executable. We'll begin by assuming our Main file has gone back to its original form with imports, instead of the simplified form:

-- app/Main.hs
module Main where

import MyStrings (greeting)
import MyFunction (modifyString)

main :: IO ()
main = putStrLn (modifyString greeting)

When we try to run generate_run, compilation fails because it cannot find the modules we're trying to import:

$ make generate_run
...
Could not find module 'MyStrings'
...
Could not find module 'MyFunction'

As we went over in the previous article, the general approach to compiling the Main module with its dependencies has two steps:

1. Compile with the -c option (to stop before the linking stage) using -i to point to a directory containing the interface files.

2. Compile the generated Main.o object file together with the library .o files to produce the executable.
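To see the shape of these two steps before we translate them into Make, here is a small Haskell sketch that just assembles the two command lines as lists of arguments. The helper names (objectIn, compileMain, linkExe) are hypothetical, and the sketch assumes flat module names, so that src/MyStrings.hs produces MyStrings.o. It prints the commands; it doesn't run them.

```haskell
module Main where

import System.FilePath (takeBaseName, (</>), (<.>))

-- Object file that GHC writes into `dir` for a given source file,
-- e.g. objectIn "./bin/lib" "src/MyStrings.hs" is "./bin/lib/MyStrings.o".
-- Assumes flat (non-hierarchical) module names.
objectIn :: FilePath -> FilePath -> FilePath
objectIn dir src = dir </> takeBaseName src <.> "o"

-- Step 1: compile the Main module without linking (-c), using -i to
-- point at the directory that holds the library's interface files.
compileMain :: FilePath -> FilePath -> [String]
compileMain bin mainSrc =
  ["ghc", "-i" <> bin, "-c", mainSrc, "-hidir", bin, "-odir", bin]

-- Step 2: link Main.o together with the library objects.
linkExe :: FilePath -> FilePath -> [FilePath] -> FilePath -> [String]
linkExe bin libDir libSrcs exe =
  ["ghc", bin </> "Main.o"] <> map (objectIn libDir) libSrcs <> ["-o", exe]

main :: IO ()
main = do
  let libSrcs = ["src/MyStrings.hs", "src/MyFunction.hs"]
  putStrLn (unwords (compileMain "./bin" "app/Main.hs"))
  putStrLn (unwords (linkExe "./bin" "./bin/lib" libSrcs "./bin/hello"))
```

The first command only needs the .hi files to type-check the imports. The .o files don't come into play until the second command.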

So we'll be modifying our generate_run rule with some extra steps. First of course, it must now depend on the library rule. Then our first new command will be to copy the .hi files from the lib directory into the top-level bin directory.

generate_run: app/Main.hs library
	@mkdir -p ./bin
	@cp ${LIB_DIR}/*.hi ${BIN}
	...

We could have avoided this step by generating the library artifacts in bin directly. I wanted to have a separate location for all of them though. And while there may be some way to direct the next command to find the interface files in the lib directory, none of the obvious ways worked for me.

Regardless, our next step will be to modify the ghc call in this rule to use the -c and -i arguments. The rest stays the same:

generate_run: app/Main.hs library
	@mkdir -p ./bin
	@cp ${LIB_DIR}/*.hi ${BIN}
	@${GHC} -i${BIN} -c app/Main.hs -hidir ${BIN} -odir ${BIN}
	...

Finally, we invoke our final ghc call, linking the .o files together. At the command line, this would look like:

$ ghc ./bin/Main.o ./bin/lib/MyStrings.o ./bin/lib/MyFunction.o -o ./bin/hello

Recalling our LIB_OBJS variable from up above, we can fill in the rule in our Makefile like so:

LIB_OBJS = $(wildcard ${LIB_DIR}/*.o)

generate_run: app/Main.hs library
	@mkdir -p ./bin
	@cp ${LIB_DIR}/*.hi ${BIN}
	@${GHC} -i${BIN} -c app/Main.hs -hidir ${BIN} -odir ${BIN}
	@${GHC} ${BIN}/Main.o ${LIB_OBJS} -o ${EXE}

And now our program will work as expected! We can clean it and jump straight to make run, since Make will run its prerequisites, library and generate_run, automatically.

$ make clean
rm -rf ./bin
$ make run
[1 of 2] Compiling MyFunction (src/MyFunction.hs, bin/lib/MyFunction.o)
[2 of 2] Compiling MyStrings (src/MyStrings.hs, bin/lib/MyStrings.o)
elloH elloH

So we've covered the library and an executable, but most Haskell projects have at least one test suite. So how would we implement that?

Adding a Test Suite

Well, a test suite is basically just a special executable. So we'll make another pair of rules, generate_test and test, that will mimic generate_run and run. Very little changes, except that we'll make another special directory within bin for our test artifacts.

TEST_DIR = ${BIN}/test
TEST_EXE = ${TEST_DIR}/run_test

generate_test: test/Spec.hs library
	@mkdir -p ${TEST_DIR}
	@cp ${LIB_DIR}/*.hi ${TEST_DIR}
	@${GHC} -i${TEST_DIR} -c test/Spec.hs -hidir ${TEST_DIR} -odir ${TEST_DIR}
	@${GHC} ${TEST_DIR}/Main.o ${LIB_OBJS} -o ${TEST_EXE}

test: generate_test
	@${TEST_EXE}

Of note here is that at the final step, we're still using Main.o instead of Spec.o. Since it's an executable module, it also compiles as Main.

But we can then use this to run our tests!

$ make clean
$ make test
[1 of 2] Compiling MyFunction (src/MyFunction.hs, bin/lib/MyFunction.o)
[2 of 2] Compiling MyStrings (src/MyStrings.hs, bin/lib/MyStrings.o)
Testing case: abcd: Correct!
Testing case: Hello: Correct!

So now we have all the different components we'd expect in a normal Haskell project. So it's interesting to consider how our makefile definition would compare against an equivalent .cabal file for this project.
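One caveat before moving on: the Spec.hs from earlier prints "Incorrect result!" on a failing case but still exits with status 0, so make test would report success either way. Here's a minimal sketch of a variant that exits with a failure code when any case fails, which makes Make stop with an error. The check helper is a hypothetical name, and modifyString is copied inline only so the sketch compiles on its own; in the project you'd keep the import from MyFunction.

```haskell
-- test/Spec.hs (hypothetical variant that reports failures via the exit code)
module Main where

import Control.Monad (unless)
import System.Exit (exitFailure)

-- Stand-in for the library function so this sketch is self-contained;
-- in the project, use `import MyFunction (modifyString)` instead.
modifyString :: String -> String
modifyString x = base <> " " <> base
  where
    base = tail x <> [head x]

-- Run one case, print the verdict, and return whether it passed.
check :: String -> String -> IO Bool
check input expected = do
  let actual = modifyString input
      ok = actual == expected
  putStrLn $ "Testing case: " <> input <> ": " <>
    if ok
      then "Correct!"
      else "Incorrect result! Expected: " <> expected <> " Actual: " <> actual
  pure ok

main :: IO ()
main = do
  results <- mapM (uncurry check) [("abcd", "bcda bcda"), ("Hello", "elloH elloH")]
  -- A non-zero exit status is what tells Make (or any CI job) the tests failed.
  unless (and results) exitFailure
```

This also lines up with how build tools typically treat test executables: they look at the exit code, not the printed text.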

Comparing to a Cabal File

Suppose we want to call our project HaskellMake and store its configuration in HaskellMake.cabal. We'd start our Cabal file with four metadata lines:

cabal-version: 1.12
name: HaskellMake
version: 0.1.0.0
build-type: Simple

Now our library would expose its two modules, using the src directory as its root. The only "dependency" is the Haskell base package. Finally, default-language is a required field.

library
  exposed-modules:
      MyStrings
    , MyFunction
  hs-source-dirs:
      src
  build-depends:
      base
  default-language: Haskell2010

The executable would similarly describe where the files are located and state a base dependency as well as a dependency on the library itself.

executable hello
  main-is: Main.hs
  hs-source-dirs:
      app
  build-depends:
      base
    , HaskellMake
  default-language: Haskell2010

Finally, our test suite would look very similar to the executable, just with a different directory and filename.

test-suite make-test
  type: exitcode-stdio-1.0
  main-is: Spec.hs
  hs-source-dirs:
      test
  build-depends:
      base
    , HaskellMake
  default-language: Haskell2010

And, if we add a bit more boilerplate, we could actually then compile our code with Stack! First we need a stack.yaml specifying the resolver and the package location:

# stack.yaml
resolver: lts-20.12
packages:
  - .

Then we need Setup.hs:

-- Setup.hs
import Distribution.Simple

main = defaultMain

And now we could actually run our code!

$ stack build
$ stack exec hello
elloH elloH
$ stack test
Testing case: abcd: Correct!
Testing case: Hello: Correct!

Now observant readers will note that we don't use any Hackage dependencies in our code - only base, which GHC always knows how to find. It would require a lot of work for us to replicate dependency management. We could download a .zip file with curl easily enough, but tracking the whole dependency tree would be extremely difficult.

And indeed, many engineers have spent a lot of time getting this process to work well with Stack and Cabal! So while it would be a useful exercise to try to do this manually with a simple dependency, I'll leave that for a future article.

When comparing the two file definitions, the .cabal definition is undoubtedly more concise and human readable, but it hides a lot of implementation details. Most of the time, this is a good thing! This is exactly what we expect from tools in general; they should allow us to work more quickly without having to worry about details.

But there are times when we might, on our own time, want to try out a more adventurous path like the one in this article, one that avoids relying too much on modern tooling. So why was this article a "useful exercise"™?

What's the Point?

So obviously, there's no chance this Makefile approach is suddenly going to supplant Cabal and Stack for building Haskell projects. Stack and Cabal are "better" for Haskell precisely because they account for the intricacies of Haskell development. In fact, by their design, GHC and Cabal both already incorporate some key ideas and features from GNU Make, especially with avoiding re-work through dependency calculation.

But there's a lot you can learn by trying this kind of exercise.

First of all, we learned about GNU Make. This tool can be very useful if you're constructing a program that combines components from different languages and systems. You could even build your Haskell code with Stack, but combine it with something else in a makefile.

A case in point for this is my recent work with Haskell and AWS. The commands for creating a Docker image, authenticating to AWS and deploying it are lengthy and difficult to remember. A makefile can, at the very least, serve as a rudimentary aliasing tool. You could run make deploy and have it automatically rebuild your changes into a Docker image and deploy that to your server.

But beyond this, it's important to take time to deliberately understand how our tools work. Stack and Cabal are great tools. But if they seem like black magic to you, then it can be a good idea to spend some time understanding what is happening at an internal level - like how GHC is being used under the hood to create our build artifacts.

Most of the fun in programming comes in effectively applying tools to create useful programs quickly. But if you ever want to make good tools in the future, you have to understand what's happening at a deeper level! At least a couple times a year, you should strive to go one level deeper in your understanding of your programming stack.

For me this time, it was understanding just a little more about GHC. Next time I might dive into dependency management, or a different topic like the internal workings of Haskell data structures. These kinds of topics might not seem immediately applicable in your day job, but you'll be surprised at the times when deeper knowledge will pay dividends for you.

Getting Better at Haskell

But enough philosophizing. If you're completely new to Haskell, going "one level deeper" might simply mean the practical ability to use these tools at a basic level. If your knowledge is more intermediate, you might want to explore ways to improve your development process. These thoughts can lead to questions like:

1. What's the best way to set up my Haskell toolchain in 2023?

2. How do I get more efficient and effective as a Haskell programmer?

You can answer these questions by signing up for my new free course Setup.hs! This will teach how to install your Haskell toolchain with GHCup and get you started running and testing your code.

Best of all, it will teach you how to use the Haskell Language Server to get code hints in your editor, which can massively increase your rate of progress. You can read more about the course in this blog post.

If you subscribe to our monthly newsletter, you'll also get an extra bonus - a 20% discount on any of our paid courses. This offer is good for two more weeks (until May 1) so don't miss out!

How to Make ChatGPT Go Around in Circles (with GHC and Haskell)

As part of my research for the recently released (and free!) Setup.hs course, I wanted to explore the different kinds of compilation commands you can run with GHC outside the context of a build system.

I wanted to know…

Can I use GHC to compile a Haskell module without its dependent source files?

The answer, obviously, should be yes. When you use Stack or Cabal to get dependencies from Hackage, you aren't downloading and recompiling all the source files for those libraries.

And I eventually managed to do it. It doesn't seem hard once you know the commands already:

$ mkdir bin
$ ghc src/MyStrings.hs src/MyFunction.hs -hidir ./bin -odir ./bin
$ ghc -c app/Main.hs -i./bin -hidir ./bin -odir ./bin
$ ghc bin/Main.o ./bin/MyStrings.o ./bin/MyFunction.o -o ./bin/hello
$ ./bin/hello
...

But, being unfamiliar with the inner workings of GHC, I struggled for a while to find this exact combination of commands, especially with their arguments.

So, like I did last week, I turned to the latest tool in the developer's toolkit: ChatGPT. But once again, everyone's new favorite pair programmer had some struggles of its own on the topic! So let's start by defining exactly the problem we're trying to solve.

The Setup

Let's start with a quick look at our initial file tree.

├── app
│   └── Main.hs
└── src
    ├── MyFunction.hs
    └── MyStrings.hs

This is meant to look the way I would organize my code in a Stack project. We have two "library" modules in the src directory, and one executable module in the app directory that will depend on the library modules. These files are all very simple:

-- src/MyStrings.hs
module MyStrings where

greeting :: String
greeting = "Hello"

-- src/MyFunction.hs
module MyFunction where

modifyString :: String -> String
modifyString x = base <> " " <> base
  where
    base = tail x <> [head x]

-- app/Main.hs
module Main where

import MyStrings (greeting)
import MyFunction (modifyString)

main :: IO ()
main = putStrLn (modifyString greeting)

Our goal is to compile and run the executable with two constraints:

1. Use only GHC (no Stack or Cabal involved)

2. Compile the library separately, so that the Main module could be compiled using only the library's build artifacts, and not the source files.

Trying to Compile

Now, there are two easy ways to compile this code if we're willing to violate our constraints (particularly the second one). If all three files are in the same directory, GHC can immediately find the modules we're importing, so we can just call ghc Main.hs.

└── src
    ├── Main.hs
    ├── MyFunction.hs
    └── MyStrings.hs

...

$ cd src
$ ghc Main.hs
$ ./Main
elloH elloH

If we keep the original file structure, it's still pretty easy if we're willing to violate constraint 2 by using the library source files in the ghc command:

$ ghc app/Main.hs src/MyStrings.hs src/MyFunction.hs
$ ./Main
elloH elloH

However, with my extra constraint of not using the library source files directly, things got a little more difficult. I originally thought "Hey, let's just include the build artifacts in the GHC call." Each library module generates an interface file (.hi) and an object file (.o). So let's try including those in the GHC call:

$ ghc src/MyStrings.hs src/MyFunction.hs
$ ls -l src/
MyFunction.hi MyFunction.hs MyFunction.o MyStrings.hi MyStrings.hs MyStrings.o
$ ghc app/Main.hs src/MyFunction.hi src/MyFunction.o src/MyStrings.hi src/MyStrings.o
...
Could not find module 'MyStrings'
...
Could not find module 'MyFunction'

As we can see, it doesn't work! Even though we're including the build artifacts as direct arguments of ghc, this doesn't help our main module to find them.

So, feeling a little too lazy to dig deep through GHC documentation, and feeling like this problem might have too many arbitrary constraints to phrase in a Google query, I decided to try our aforementioned new favorite tool.

Enter: ChatGPT

The First Session

(Conversation has been abbreviated for relevance)

Me

How do I link existing .o files with a new source module using the 'ghc' command?

Chat GPT

To link existing object (.o) files with a new source module using the ghc command, you need to include the object files in the list of input files passed to the ghc command.

Assuming you have an existing .o file called example.o and a new source module called MyModule.hs, you can link them together into an executable program using the following command:

$ ghc -o MyProgram MyModule.hs example.o

This command tells ghc to compile and link the MyModule.hs source file with the example.o object file and create an executable program called MyProgram. The -o flag specifies the output file name.

Me

Is it possible to use a ghc command to link a Haskell object file (.o) with a Haskell source file that imports the other module?

Chat GPT

Yes, it is possible to use the ghc command to link a Haskell object file (.o) with a Haskell source file that imports the other module. Here's an example:

Suppose you have two files, MyModule.hs and OtherModule.o, and MyModule.hs imports a module that is defined in OtherModule.o. To link the two files into an executable program, you can use the following command:

$ ghc -o MyProgram MyModule.hs OtherModule.o

This command tells ghc to compile and link the MyModule.hs source file with the OtherModule.o object file, and create an executable program called MyProgram.

Me

Hmmm I don't think this works. I created the following file OtherModule.hs:

module OtherModule where

greeting :: String
greeting = "Hello"

And I'm trying to include it in another file:

module Main where

import OtherModule (greeting)

main :: IO ()
main = putStrLn greeting

I started by compiling OtherModule:

$ ghc src/OtherModule.hs

Then I moved OtherModule.o to the app directory (with MyModule.hs), and tried running this command:

$ cd app
$ ghc -o MyProgram MyModule.hs OtherModule.o

I get the error:

"Could not find module 'OtherModule'"

Chat GPT

I apologize for the confusion in my previous answer. You are correct that simply linking the object file with the executable does not work in this case.

When GHC compiles a module, it generates an interface file (.hi) that contains information about the module's name, type signatures, and other details. The compiled object file (.o) contains the compiled machine code for the functions defined in the module.

When you try to import a module in a source file, GHC looks for the interface file (OtherModule.hi) to find information about the module's name and type signatures. If the interface file is not found, GHC will raise an error.

In your case, when you compile OtherModule.hs, GHC generates an interface file called OtherModule.hi. To compile MyModule.hs, GHC needs to be able to find OtherModule.hi in order to resolve the import of OtherModule. Simply linking the OtherModule.o object file is not enough.

To make this work, you need to compile OtherModule.hs and MyModule.hs together, like this:

ghc -o MyProgram MyModule.hs src/OtherModule.hs

This command tells GHC to compile MyModule.hs and OtherModule.hs together, and create an executable program called MyProgram.

I hope this clears up the confusion. Again, I apologize for the confusion in my previous answer.

Me

Ah, so I need to include both source files, and it can't be done by linking an object file with another source file?

Chat GPT

Yes, that's correct. When you import a module in a source file, GHC needs to be able to find the interface file (.hi) for that module in order to resolve the import. If the interface file is not found, GHC will raise an error.

When you compile a source file with GHC, it generates both an object file (.o) and an interface file (.hi). The object file contains the compiled machine code for the functions defined in the module, while the interface file contains information about the module's name, type signatures, and other details.

When you compile a source file that imports another module, GHC needs to be able to find the interface file for that module in order to compile the importing module. If the interface file is not found, GHC will raise an error.

So in your case, you need to compile both MyModule.hs and OtherModule.hs together so that GHC can generate the necessary interface files and link the resulting object files together to produce the executable program.

It is not possible to directly link an object file with a source file that imports it, as GHC needs the interface file to be present in order to compile the importing module.

Analysis

Suspiciously, ChatGPT suggests a less detailed command than the previous one that failed for me!

$ ghc -o MyProgram MyModule.hs OtherModule.o

It includes only the object file, instead of the object and interface file. And, of course this fails, like I showed above.

Now there's an interesting statement that will come into play later, though I didn't realize it!

Yes, that's correct. When you import a module in a source file, GHC needs to be able to find the interface file (.hi) for that module in order to resolve the import. If the interface file is not found, GHC will raise an error.

I assumed that to "find" the interface file meant providing it on the command line (as in my original command). So with that idea having failed, I fixated on one part of the final statement:

It is not possible to directly link an object file with a source file that imports it...

And concluded that getting the right information from ChatGPT might be a lost cause. It seemed like it was sure this was possible, and then just as sure that it was impossible. But details matter, as we'll see!

Working Around It

So feeling slightly defeated, I decided to poke around the areas of the code where Cabal actually saves packages to see if there was a pattern I could follow. And I managed to cobble together a convoluted workaround.

What I observed was that GHC could use any module from a library in the Cabal package database. On my system's setup, this could be found in the .ghcup cache:

$ cd ~/.ghcup/ghc/9.2.5/lib/ghc-9.2.5/
$ ls
array-0.5.4.0/
base-4.16.4.0/
...
package.conf.d/
$ ls package.conf.d
array-0.5.4.0.conf
base-4.16.4.0.conf
...

And indeed, the package directories did not have source files, only various forms of compiled object and interface files. And each package had a corresponding .conf file in the package.conf.d directory.

So I could...

- Make my own directory in the package database (MyPackage-0.1.0.0)

- Place the .hi and .o files in there

- Create a phony MyPackage-0.1.0.0.conf and put it in package.conf.d. (Base it on an existing .conf file but make some substitutions)

And this...technically...works. But manually manipulating a package database wasn't the solution I had in mind. Surely there's something easier, right?

The Second Session

After a week of sitting on this, I decided to come back to ChatGPT and see if it had any other ideas. I decided to frame my question in a different way, stating a lot more details up front. Lo and behold, it had a different answer this time!

Me

Suppose I have a Haskell module called MyStrings:

module MyStrings where

greeting :: String
greeting = "Hello"

I can compile this module:

$ ghc MyStrings.hs

This generates an interface file, MyStrings.hi, and an object file, MyStrings.o.

Now suppose I have an executable file, Main.hs, that imports MyStrings:

module Main where

import MyStrings (greeting)

main :: IO ()
main = putStrLn greeting

Can I use ghc to compile this Main file by using the .hi and .o files, without including the imported source file, MyStrings.hs, in the command?

Chat GPT

Yes, you can use the .hi and .o files generated by compiling MyStrings.hs to compile Main.hs without including MyStrings.hs in the command. To do so, you can use the -c option to tell GHC to compile Main.hs to an object file, and then use the -o option to link the object files together into an executable:

$ ghc -c Main.hs
$ ghc Main.o MyStrings.o -o myprogram

The first command compiles Main.hs to an object file Main.o, without attempting to link it. The second command links Main.o with MyStrings.o and creates an executable called myprogram. The .hi files are not needed for linking, so you don't need to include them in the command.

Note that in order for this to work, the interface file MyStrings.hi must be present in the same directory as Main.hs when you compile it. If you move MyStrings.hi to a different directory, you'll need to use the -i option to tell GHC where to find it:

$ ghc -i/path/to/MyStrings -c Main.hs
$ ghc Main.o /path/to/MyStrings/MyStrings.o -o myprogram

Here, -i/path/to/MyStrings tells GHC to look for MyStrings.hi in the directory /path/to/MyStrings.

Analysis

So there are two key ingredients that weren't mentioned at all in our first pass: the -c option and the -i option.

Using -c performs compilation without linking. With this option, GHC is able to produce an object file for our Main module without needing its dependent objects. So .o files are unnecessary in this stage.

We still need the .hi files though. But instead of listing them individually on the command line, we use the -i argument to tell GHC which directory to search for them. It's an odd argument, because we put the path right after the i, without any spacing.

After we're done with the first phase, then we can link all our object files together.

Solving It

And sure enough, this approach works!

$ ghc src/MyStrings.hs src/MyFunction.hs

$ ghc -c app/Main.hs -i./src

$ ghc app/Main.o ./src/MyStrings.o ./src/MyFunction.o -o hello

$ ./hello

elloH elloH

And if we want to be a little cleaner about putting our artifacts in a single location, we can use the -hidir and -odir arguments for storing everything in a bin directory.

$ mkdir bin

$ ghc src/MyStrings.hs src/MyFunction.hs -hidir ./bin -odir ./bin

$ ghc -c app/Main.hs -i./bin -hidir ./bin -odir ./bin

$ ghc bin/Main.o ./bin/MyStrings.o ./bin/MyFunction.o -o ./bin/hello

$ ./bin/hello

elloH elloH

And we're done! Our program is compiling as we wanted it to, without our "Main" compilation command directly using the library source files.

Conclusion

So with that fun little adventure concluded, what can we learn from this? Well, first of all, prompts matter a great deal when you're using a chatbot. The more detailed your prompt, and the more you spell out your assumptions, the more likely you'll get the answer you're looking for. My second prompt was waaay more detailed than my first prompt, and the solution was much better as a result.

But a more pertinent lesson for Haskellers might be that using GHC by itself can be a big pain. So if you're a beginner, you might be asking:

What's the normal way to build Haskell Code?

You can learn all about building and running your Haskell code in our new free course, Setup.hs. This course will teach you the easy steps to set up your Haskell toolchain, and show you how to build and run your code using Stack, Haskell's most popular build system. You'll even learn how to get Haskell integrations in several popular code editors so you can learn from your mistakes much more quickly. Learn more about it on the course page.

And if you subscribe to our monthly newsletter, you'll get a code for 20% off any of our paid courses until May 1st! So don't miss out on that offer!

New Free Course: Setup.hs!

You can read all the Haskell articles you want, but unless you write the code for yourself, you'll never get anywhere! But there are so many different tools and ideas floating around out there, so how are you supposed to know what to do? How do you even get started writing a Haskell project? And how can you make your development process as efficient as possible?

The first course I ever made, Your First Haskell Project, was designed to help beginners answer these questions. But over the years, it's become a bit dated, and I thought it would be good to sunset that course and replace it with a new alternative, Setup.hs. Like its predecessor, Setup.hs is totally free!

Setup.hs is a short course designed for many levels of Haskellers! Newcomers will learn all the basics of building and running your code. More experienced Haskellers will get some new tools for managing all your Haskell-related programs, as well as some tips for integrating Haskell features into your code editor!

Here's what you'll learn in the course:

- How to install and manage all of the core Haskell tools (GHC, Cabal, Stack)

- What components you need in your Haskell project and how you can build and run them all

- How to get your editor to use advanced features, like flagging compilation errors and providing autocomplete suggestions.

We'll do all of this in a hands-on way with detailed, step-by-step exercises!

Improvements

Setup.hs makes a few notable updates and improvements compared to Your First Haskell Project.

First, it uses GHCup to install all the necessary tools instead of the now-deprecated Haskell Platform. GHCup allows for seamless switching between the different versions of all our tools, which can be very useful when you have many projects on your system!

Second, it goes into more detail about pretty much every topic, whether that's project organization, Stack snapshots, or extra dependencies.

Third and probably most importantly, Setup.hs will teach you how to get Haskell code hints in three of the most common code editors (VS Code, Vim & Emacs) using Haskell Language Server. Even if these lectures don't cover the particular editor you use, they'll give you a great idea of what you need to search for to learn how. I can't overstate how useful these kinds of integrations are. They'll massively speed up your development and, if you're a beginner, they'll rapidly accelerate your learning.

If all this sounds super interesting to you, head over to the course page and sign up!

Data Structures: Heaps!

Today we finish our Data Structures series! We have one more structure to look at, and that is the Heap! This structure often gets overlooked when people think of data structures, since its role is a bit narrow and specific. You can look at the full rundown on the series page here. If you've missed any of our previous structure summaries, here's the list:

That's all for our Data Structures series! The blog will be back soon with more content!

Data Structures: Sequences!

Haskell's basic "list" type works like a singly linked list. However, the lack of efficient access to elements at the back means it isn't useful for a lot of algorithms. A double-ended queue (AKA a deque) allows a lot more flexibility.

In Haskell, we can get this double-ended behavior with the Sequence type. This type works like lists in some ways, but it has a lot of unique operators and mechanics. So it's worth reading up on it here in the latest part of our Data Structures Series. Here's a quick review of the series so far:

We have one more structure to look at next time, which means this series is going to bleed into August a bit. So make sure to come back next week!

Data Structures: Vectors!

Last week we started looking at less common Haskell data structures, starting with Arrays. Today we're taking one step further and looking at Vectors. These combine some of the performance mechanics of arrays with the API of lists. Many operations are quite a bit faster than you can find with lists.

For a full review, here's a list of the structures we've covered so far:

We've got two more structures coming up, so stay tuned!

Data Structures: Hash Maps!

Throughout this month we've been exploring the basics of many different data structures in Haskell. I started out with a general process called 10 Steps for understanding Data Structures in Haskell. And I've now applied that process to four common structures in Haskell:

Today we're taking the next logical step in the progression and looking at Hash Maps. Starting later this week, we'll start looking at lesser-known Haskell structures that don't fit some of the common patterns we've been seeing so far! So keep an eye on this blog page as well as the Data Structures Series page!

Data Structures: Hash Sets!

Last week I presented 10 Steps for understanding Data Structures in Haskell. In our new Data Structures Series you'll be able to see these steps applied to all the different data structures we can use in Haskell.

Today marks the fourth article in this series, describing the Hash Set data type. If you missed the last few parts, you can also head to the Series Page and take a look!

Data Structures: Maps!

Last week I presented 10 Steps for understanding Data Structures in Haskell. In our new Data Structures Series you'll be able to see these steps applied to all the different data structures we can use in Haskell.

Today, we're debuting the third part of the series, looking at the Map data type. If you missed the last couple parts, you can also head to the Series Page and take a look!

Data Structures: Sets!

Earlier this week I presented 10 Steps for understanding Data Structures in Haskell. In our new Data Structures Series you'll be able to see these steps applied to all the different data structures we can use in Haskell.

Today, for the first time, you can take a look at the second part of this series, on the Set data type. If you missed the first part on lists, you can also find that over here.

10 Steps to Understanding Data Structures in Haskell

Last year I completed Advent of Code, which presented a lot of interesting algorithmic challenges. One thing these problems really forced me to do was focus more clearly on using appropriate data structures, often going beyond the basics.

And looking back on that, it occurred to me that I hadn't really seen many tutorials on Haskell structures beyond lists and maps. Nor in fact, had I thought to write any myself! I've touched on sequences and arrays, but usually in the context of another topic, rather than focusing on the structure itself.

So after thinking about it, I decided it would be worthwhile to write some material providing an overview of all the different structures you can use in Haskell. So data structures will be our "blog topic" starting in this month of July, and probably going into August. I'll be adding these overviews in a permanent series, so each blog post over the next few Mondays and Thursdays will link to the newest installment in that series.

But even beyond providing a basic overview of each type, I thought it would be helpful to come up with a process for learning new data structures - a process I could apply to learning any structure in any language. Going to the relevant API page can always be a little overwhelming. Where do you start? Which functions do you actually need?

So I made a list of the 10 steps you should take when learning the API for a data structure in a new language. The first few of these have to do with reminding yourself what the structure is used for. Then you get down to the most important actions to get yourself started using that structure in code.

The 10 Steps

- What operations does it support most efficiently? (What is it good at?)

- What is it not as good at?

- How many parameters does the type use and what are their constraints?

- How do I initialize the structure?

- How do I get the size of the structure?

- How do I add items to the structure?

- How do I access (or get) elements from the structure?

- If possible, how do I delete elements from the structure?

- How do I combine two of these structures?

- How should I import the functions of this structure?
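To give a rough feel for how the later steps (initializing, size, adding, accessing, deleting, combining, importing) turn into code, here's a minimal sketch using the basic list type. The names below (startingItems, withOne, and so on) are made up for this illustration, and this isn't necessarily how the series itself presents lists.

```haskell
module Main where

-- Importing: lists live in the Prelude, but extra helpers come from Data.List.
import Data.List (delete)

-- Initializing: an empty list, or a literal with some items.
emptyList :: [Int]
emptyList = []

startingItems :: [Int]
startingItems = [2, 3, 4]

-- Adding: consing onto the front is O(1).
withOne :: [Int]
withOne = 1 : startingItems

-- Deleting: 'delete' removes the first matching element, in O(n).
withoutThree :: [Int]
withoutThree = delete 3 withOne

-- Combining: (++) appends two lists.
combined :: [Int]
combined = withoutThree ++ [10, 20]

main :: IO ()
main = do
  print (length emptyList)  -- size of the empty list
  print (length withOne)    -- size after adding one item
  print (withOne !! 2)      -- access by index (O(n) for lists)
  print withoutThree
  print combined
```

Lists make most of these steps look trivial, which is part of why they're a good first structure to run the checklist against.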

Each part of the series will run through these steps for a new structure, focusing on the basic API functions you'll need to know. To see my first try at using this approach where I go over the basic list type, head over to the first part of the series! As I update the series with more advanced structures I'll add more links to this post.

If you want to quickly see all the APIs for the structures we'll be covering, head to our eBooks page and download the FREE Data Structures at a Glance eBook!

Hidden Identity: Using the Identity Monad

Last week we announced our new Making Sense of Monads course. If you subscribe to our mailing list in the next week, you can get a special discount for this and our other courses! So don't miss out!

But in the meantime, we've got one more article on monads! Last week, we looked at the "Function monad". This week, we're going to explore another monad that you might not think about as much. But even if we don't specifically invoke it, this monad is actually present quite often, just in a hidden way! Once again, you can watch the video to learn more about this, or just read along below!

On its face, the identity monad is very simple. It just seems to wrap a value, and we can retrieve this value by calling runIdentity:

newtype Identity a = Identity { runIdentity :: a }

So we can easily wrap any value in the Identity monad just by calling the Identity constructor, and we can unwrap it by calling runIdentity.

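We can write a very basic instance of the Monad typeclass for this type that just incorporates wrapping and unwrapping the value (on GHC 7.10 and later, Functor and Applicative instances are required as well, since they are superclasses of Monad):

instance Monad Identity where

  return = Identity

  (Identity a) >>= f = f a

A Base Monad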

So what's the point or use of this? Well first of all, let's consider a lot of common monads. We might think of Reader, Writer and State. These all have transformer variations like ReaderT, WriterT, and StateT. But actually, it's the "vanilla" versions of these monads that are the variations!

If we consider the Reader monad, this is actually a type synonym for a transformer over the Identity monad!

type Reader r = ReaderT r Identity

In this way, we don't need multiple abstractions to deal with "vanilla" monads and their transformers. The vanilla versions are the same as the transformers. The runReader function can actually be written in terms of runReaderT and runIdentity:

runReader :: Reader r a -> r -> a

runReader action = runIdentity . (runReaderT action)

Using Identity
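To see the runReader definition above in action, here's a minimal, self-contained sketch. It assumes a hand-rolled ReaderT newtype purely for illustration (the real one lives in the transformers package), and doubleEnv is a made-up example action.

```haskell
module Main where

import Data.Functor.Identity (Identity (..))

-- A hand-rolled ReaderT for illustration only; the real type is
-- provided by the transformers package.
newtype ReaderT r m a = ReaderT { runReaderT :: r -> m a }

-- The "vanilla" Reader is just ReaderT with Identity as the base monad.
type Reader r = ReaderT r Identity

-- Run the transformer, then unwrap the Identity layer.
runReader :: Reader r a -> r -> a
runReader action = runIdentity . runReaderT action

-- Hypothetical example action: reads an Int environment and doubles it.
doubleEnv :: Reader Int Int
doubleEnv = ReaderT (\env -> Identity (env * 2))

main :: IO ()
main = print (runReader doubleEnv 21)  -- prints 42
```

Because Identity adds no effects of its own, unwrapping it is all that separates running the transformer from running the plain Reader.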

Now, there aren't that many reasons to use Identity explicitly, since the monad encapsulates no computational strategy. But here's one idea. Suppose that you've written a transformation function that takes a monadic action and runs some transformations on the inner value:

transformInt :: (Monad m) => m Int -> m (Double, Int)

transformInt action = do

  asDouble <- fromIntegral <$> action

  tripled <- (3 *) <$> action

  return (asDouble, tripled)

You would get an error if you tried to apply this to a normal unwrapped value. But by wrapping in Identity, we can reuse this function!

>> transformInt 5

Error!

>> transformInt (Identity 5)

Identity (5.0,15)

We can imagine the same thing with a function constrained by Functor or Applicative. Remember that Identity belongs to these classes as well, since it is a Monad!
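As a sketch of that idea, the block below defines Identity by hand with all three instances, then passes it to helpers that only ask for Functor or Applicative. The helper names describe and addBoth are hypothetical, invented for this example; in real code you would import Identity from Data.Functor.Identity rather than redefining it.

```haskell
module Main where

-- A hand-written Identity, so that all three instances are visible.
newtype Identity a = Identity { runIdentity :: a }

instance Functor Identity where
  fmap f (Identity a) = Identity (f a)

instance Applicative Identity where
  pure = Identity
  Identity f <*> Identity a = Identity (f a)

instance Monad Identity where
  Identity a >>= f = f a

-- Hypothetical helper that only needs a Functor constraint.
describe :: (Functor f) => f Int -> f String
describe = fmap (\n -> "value: " ++ show n)

-- Hypothetical helper that only needs an Applicative constraint.
addBoth :: (Applicative f) => f Int -> f Int -> f Int
addBoth x y = (+) <$> x <*> y

main :: IO ()
main = do
  putStrLn (runIdentity (describe (Identity 5)))
  print (runIdentity (addBoth (Identity 2) (Identity 3)))
```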

Of course, it would be possible in this case to write a normal function that would accomplish the simple task in this example. But no matter how complex the task, we could write a version relying on the Identity monad that will always work!

transformInt' :: Int -> (Double, Int)

transformInt' = runIdentity . transformInt . Identity

...

>> transformInt' 5

(5.0,15)

The Identity monad is just a bit of trivia regarding monads. If you've been dying to learn how to really use monads in your own programming, you should sign up for our new course Making Sense of Monads! For the next week you can subscribe to our mailing list and get a discount on this course as well as our other courses!

Function Application: Using the Dollar Sign ($)

Things have been a little quiet here on the blog lately. We've got a lot of different projects going on behind the scenes, and we'll be making some big announcements about those soon! If you want to stay up to date with the latest news, make sure you subscribe to our mailing list! You'll get access to our subscriber-only resources, including our Beginners Checklist and our Production Checklist!

The next few posts will include a video as well as a written version! So you can click the play button below, or scroll down further to read along!

Let's talk about one of the unsung heroes, a true foot soldier of the Haskell language: the function application operator, ($). I used this operator a lot for a long time without really understanding it. Let's look at its type signature and implementation:

infixr 0 $

($) :: (a -> b) -> a -> b

f $ a = f a

For the longest time, I treated this operator as though it were a syntactic trick, and didn't even really think about it having a type signature. And when we look at this signature, it seems really basic. Quite simply, it takes a function, and an input to that function, and applies the function.

At first glance, this seems totally unnecessary! Why not just do "normal" function application by placing the argument next to the function?

add5 :: Int -> Int

add5 = (+) 5

-- Function application operator

eleven = add5 $ 6

-- Same result as "Normal" function application

eleven' = add5 6

This operator doesn't let us write any function we couldn't write without it. But it does offer us some opportunities to organize our code a bit differently. And in some cases this is cleaner, and it's clearer what is going on semantically.

Grouping with $

Because its precedence is so low (level 0) this operator can let us do some kind of rudimentary grouping. This example doesn't compile, because Haskell tries to treat add5 as the second input to (+), rather than grouping it with 6, which appears to be its argument.

-- Doesn't compile!

value = (+) 11 add5 6

We can group these together using parentheses. But the low precedence of ($) also allows it to act as a "separator". We break our expression into two groups. First we apply (+) to its first argument, 11, and then we apply the resulting function to the result of add5 6.

-- These work by grouping in different ways!

value = (+) 11 $ add5 6

value' = (+) 11 (add5 6)

Other operators and function applications bind more tightly, so we can have expressions like this:

value = (+) 11 $ 6 + 7 * 11 - 4

A line with one $ essentially says "get the result of everything to the right and apply it as one final argument". So we calculate the result on the right (79) and then perform (+) 11 with that result.
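Here's a small runnable sketch that puts these grouping examples side by side. The names withDollar, withParens and arithmetic are just labels chosen for this illustration.

```haskell
module Main where

add5 :: Int -> Int
add5 = (+) 5

-- ($) has the lowest precedence, so everything to its right is
-- grouped into a single final argument.
withDollar :: Int
withDollar = (+) 11 $ add5 6

-- The same grouping, written with explicit parentheses.
withParens :: Int
withParens = (+) 11 (add5 6)

-- Arithmetic operators bind more tightly than ($).
arithmetic :: Int
arithmetic = (+) 11 $ 6 + 7 * 11 - 4

main :: IO ()
main = do
  print (withDollar == withParens)  -- the two groupings agree
  print withDollar                  -- 11 + (5 + 6)
  print arithmetic                  -- 11 + 79
```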

Reordering Operations

The order of application also reverses with the function application operator as compared to normal function application. Let's consider this basic multiplication:

value = (*) 23 15

Normal function application associates from left to right. So we apply the function (*) to the argument 23, and then apply the resulting function to the argument 15.

However, we'll get an error if we use $ in between the elements of this expression!

-- Fails!

value = (*) $ 23 $ 15

This is because ($) orders from right-to-left. So it first tries to treat "23" as a function and apply "15" as its argument.
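The right-to-left ordering can be checked directly. In this minimal sketch (chained and explicit are made-up names), a chain of $ operators is compared against the fully parenthesized expression it stands for.

```haskell
module Main where

-- ($) is right-associative, so a chain groups from the right:
-- f $ g $ x  is read as  f $ (g $ x), which is f (g x).
chained :: Int
chained = negate $ (* 2) $ 3 + 4

-- The same computation with every grouping spelled out.
explicit :: Int
explicit = negate ((* 2) (3 + 4))

main :: IO ()
main = do
  print chained                -- negate (2 * 7)
  print (chained == explicit)  -- both spellings agree
```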

If you have a chain of $ operations, the furthest right expression should be a single value. Then each grouping to the left should be a function taking a single argument. Here's how we might make an example with three sections.

value = (*) 23 $ (+10) $ 2 + 3

Higher Order Functions

Having an operator for function application also makes it convenient to use it with higher order functions. Let's suppose we're zipping together a list of functions with a list of arguments.

functions = [(+3), (*5), (+2)]

arguments = [2, 5, 7]

The zipWith function is helpful here, but this first approach is a little clunky with a lambda:

results = zipWith (\f a -> f a) functions arguments

But of course, we can just replace that with the function application operator!

results = zipWith ($) functions arguments

-- results == [5, 25, 9]

So hopefully we know a bit more about the "dollar sign" now, and can use it more intelligently! Remember to subscribe to Monday Morning Haskell! There will be special offers for subscribers in the next few weeks, so you don't want to miss out!

Haskellings Beta!

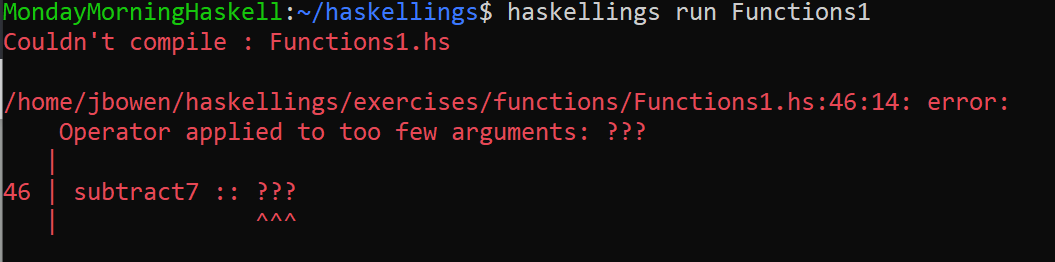

We spent a few months last year building the groundwork for Haskellings in this YouTube series. Now after some more hard work, we're happy to announce that Haskellings is now available in the beta stage. This program is meant to be an interactive tutorial for learning the Haskell language. If you've never written a line of Haskell in your life, this program is designed to help you take those first steps! You can take a look at the Github repository to learn all the details of using it, but here's a quick overview.

Overview

Haskellings gives you the chance to write Haskell code starting from the very basics with a quick evaluation loop. It currently has 50 different exercises for you to work with. In each exercise, you can read about a Haskell concept, make appropriate modifications, and then run the code with a single command to check your work!

You can do exercises individually, but the easiest way to do everything in order is to run the program in Watcher mode. In this mode, it will automatically tell you which exercise is next. It will also re-run each exercise every time you save your work.

Haskellings covers a decent amount of ground on basic language concepts. It starts with the very basics of expressions, types and functions, and goes through the basic usage of monads.

Haskellings is an open source project! If you want to report issues or contribute, learn how by reading this document! So go ahead, give it a try!

Don't Forget: Haskell From Scratch

Haskellings is a quick and easy way to learn the language basics, but it only scratches the surface of a lot of topics. To get a more in-depth look at the language, you should consider our Haskell From Scratch video course. This course includes:

- Hours of video lectures explaining core language concepts and syntax

- Dozens of practice problems to help you hone your skills

- Access to our Slack channel, so you can get help and have your questions answered

- A final mini-project, to help you put the pieces together

This course will help you build a rock-solid foundation for your future Haskell learnings. And even better, we've now cut the price in half! So don't miss out!

Beginners Series Updated!

Where has Monday Morning Haskell been? Well, to ring in 2021, we've been making some big improvements to the permanent content on the site. So far we've focused on the Beginners section of the site. All the series here are updated with improved code blocks and syntax highlighting. In addition, we've fully revised most of them and added companion Github repositories so you can follow along!

Liftoff

Our Liftoff Series is our first stop for Haskell beginners. If you've never written a line of Haskell in your life but want to learn, this is the place to start! You can follow along with all the code in the series by using this Github repository.

Monads Series

Monads are a big "barrier" topic in Haskell. They don't really exist much in most other languages, but they're super important in Haskell. Our Monads Series breaks them down, starting with simpler functional structures so you can understand more easily! The code for this series can be found on Github here.

Testing Basics

You can't do serious production development in any language until you've mastered the basics of unit testing. Our Testing Series will school you on the basics of writing and running your first unit tests in Haskell. It'll also teach you about profiling your code so you can see improvements in its runtime! And you can follow along with the code right here on Github!

Haskell Data Basics

Haskell's data types are one of the first things that made me enjoy Haskell more than other languages. In this series we explore the ins and outs of Haskell's data declaration syntax and related topics like typeclasses. We compare it side-by-side with other languages and see how much easier it is to express certain concepts! Take a look at the code here!

What's Next?

Next up we'll be going through the same process for some of our more advanced series. So in the next couple weeks you can look forward to improvements there! Stay tuned!

Dependencies and Package Databases

Here's the final video in our Haskellings series! We'll figure out how to add dependencies to our exercises. This is a bit trickier than it looks, since we're running GHC outside of the Stack context. But with a little intuition, we can find where Stack is storing its package database and use that when running the exercise!

Next week, we'll do a quick summary of our work on this program, and see what the future holds for it!

Adding Hints

If a user is struggling with a particular exercise, we don't want them to get stuck! In this week's video, we'll see how to add "hints" to the Watcher. This way, a user can get a little extra help when they need it! We can also use this functionality to add more features in the future, like skipping an exercise, or even asking the user questions as part of the exercise!

Executing Executables!

In Haskell, we'd like to think "If it compiles, it works!" But of course this isn't generally the case. So in addition to testing whether or not our exercises compile, we'll also want to run the code the user wrote and see if it works properly! In this week's video, we'll see how we can distinguish between these two different exercise types!

Testing the Watcher

In our Haskellings program, the Watcher is a complex piece of functionality. It has to track changes to particular files, re-compile them on the spot, and keep track of the order in which these exercises should be done. So naturally, writing unit tests for it presents challenges as well! In this video, we'll explore how to simulate file modifications in the middle of a unit test.

Unit Testing Compilation

We've used Hspec before on the blog. But now we'll apply it to our Haskellings program, in conjunction with the program configuration changes we made last week! We'll write a couple simple unit tests that will test the basic compilation behavior of our program. Check out the video to see how!